Using calculated heading values to ensure videos are shown in 360 players facing the same direction as they were shot.

Now that we have the heading values for each frame in the video (see last week's post), we can use them to adjust the yaw in our World Lock video to reverse the effect.

To do this, we can take exactly the same approach as I showed you for images in the post Adjusting the yaw of an equirectangular 360 photo using ImageMagick.

That is:

1. take the first heading reported in the calculated telemetry file, and assume that as the World Lock heading

2. calculate the yaw adjustment needed for each frame using the calculation `true heading` (reported in telemetry .json) - `World Lock heading` (calculated at step 1)

3. use this value with ffmpeg to adjust yaw
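As a rough sketch of that calculation in Python: the function name `yaw_delta` and the sample headings are my own illustration, not taken from the telemetry file. I also wrap the result into the range -180 to 180 degrees, on the assumption that the value will be passed to a filter expecting yaw in that range.

```python
def yaw_delta(frame_heading: float, world_lock_heading: float) -> float:
    """Return frame_heading - world_lock_heading, wrapped into [-180, 180)."""
    return (frame_heading - world_lock_heading + 180.0) % 360.0 - 180.0


# Illustrative HEAD values in degrees (not real telemetry).
headings = [350.0, 355.0, 2.0, 10.0]

# The first reported heading is assumed to be the World Lock heading.
world_lock = headings[0]

deltas = [yaw_delta(h, world_lock) for h in headings]
print(deltas)  # [0.0, 5.0, 12.0, 20.0]
```

The wrap matters when the heading crosses north: going from 350 to 2 degrees is a 12 degree turn, not a -348 degree one.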

It’s easy to align frames to heading values, because we have a HEAD value (heading in degrees) for each frame (with date and cts times) reported in the updated telemetry file. All that’s needed is to iterate through frames and HEAD values to make the yaw adjustment.
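If the frames and HEAD samples ever do not line up one-to-one, one option is to pick the sample whose `cts` is closest to each frame's timestamp. The sketch below assumes `cts` is in milliseconds from the start of the video and that the samples are sorted by time. The function name and the sample values are hypothetical.

```python
from bisect import bisect_left


def heading_for_frame(frame_index, frame_rate, cts_ms, heads):
    """Pick the HEAD sample whose cts (ms) is closest to the frame's timestamp."""
    t_ms = frame_index * 1000.0 / frame_rate
    i = bisect_left(cts_ms, t_ms)
    if i == 0:
        return heads[0]
    if i == len(cts_ms):
        return heads[-1]
    before, after = cts_ms[i - 1], cts_ms[i]
    # Choose whichever neighbouring sample is nearer in time.
    return heads[i] if (after - t_ms) < (t_ms - before) else heads[i - 1]


# Illustrative values only: cts in ms and the matching HEAD values in degrees.
cts_ms = [0.0, 33.4, 66.7, 100.1]
heads = [90.0, 91.0, 92.5, 94.0]

print(heading_for_frame(2, 29.97, cts_ms, heads))  # 92.5
```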

I couldn’t find any documentation on whether the v360 filter supports a time option, so our implementation works by first extracting the frames and applying the filter to them one-by-one.

My proposed ffmpeg workflow is as follows:

1. Extract all the frames in the video using ffmpeg

   `ffmpeg -i <videopath> -r <frame_rate_str> -q:v 2 <extract_dir>/%9d.jpg`

2. Take the framerate information to compare against `cts` values in the telemetry to get the right sensor value for each frame

3. Take the first frame's heading from the telemetry `HEAD` stream (the World Lock heading)

4. Take each frame's heading value from the telemetry `HEAD` stream

5. Calculate the delta (frame `HEAD` value - first frame's `HEAD` value)

6. Adjust each frame using the ffmpeg `v360` filter with the `yaw` value `Z` set to the delta calculated at step 5 for each frame

   `ffmpeg -i <frame_path> -vf v360=input=e:output=e:yaw=Z <out_path>`

7. Rebuild the video from frames adjusted in the last step

   `ffmpeg -y -r <frame_rate_str> -f concat -safe 0 -i images.txt -c:v libx264 -vf "fps=<frame_rate_str>,format=yuv420p" <out.mp4>`

8. Copy the other video streams (audio and telemetry) to the newly processed video (with adjusted yaw) from the original video
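To give a feel for how the per-frame adjustment and rebuild steps could be scripted, here is a minimal Python sketch. It only builds the ffmpeg argument lists and the text for `images.txt`; it does not run ffmpeg. The helper names, file paths and the three-decimal formatting of the yaw are my own assumptions, and the filter string is written with both `input` and `output` named.

```python
def v360_command(frame_path, out_path, yaw):
    """Build the ffmpeg arguments that rotate one equirectangular frame by `yaw` degrees."""
    return [
        "ffmpeg", "-y",
        "-i", str(frame_path),
        "-vf", f"v360=input=e:output=e:yaw={yaw:.3f}",
        str(out_path),
    ]


def concat_list(frame_paths):
    """Build the text of images.txt: one `file '<path>'` line per adjusted frame."""
    return "".join(f"file '{p}'\n" for p in frame_paths)


# Hypothetical frame paths and yaw deltas in degrees.
frames = ["frames/000000001.jpg", "frames/000000002.jpg"]
deltas = [0.0, 12.0]

commands = [
    v360_command(f, f.replace("frames/", "adjusted/"), d)
    for f, d in zip(frames, deltas)
]
images_txt = concat_list(f.replace("frames/", "adjusted/") for f in frames)

print(commands[1])
print(images_txt)
```

Each argument list could then be passed to `subprocess.run(cmd, check=True)`, with `images_txt` written to disk before running the rebuild command.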

Reviewing the original World Lock video I built this proof-of-concept for:

And now, here it is after adjusting for World Lock (or as I like to call it, UnWorld Lock):

It works!

However, you will notice it is shaky along the horizontal plane.

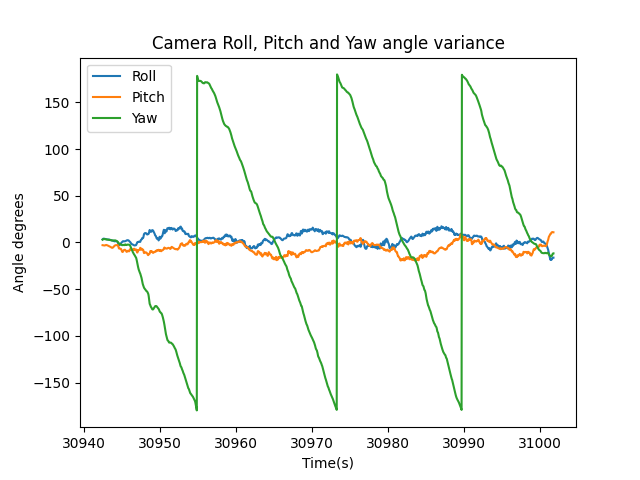

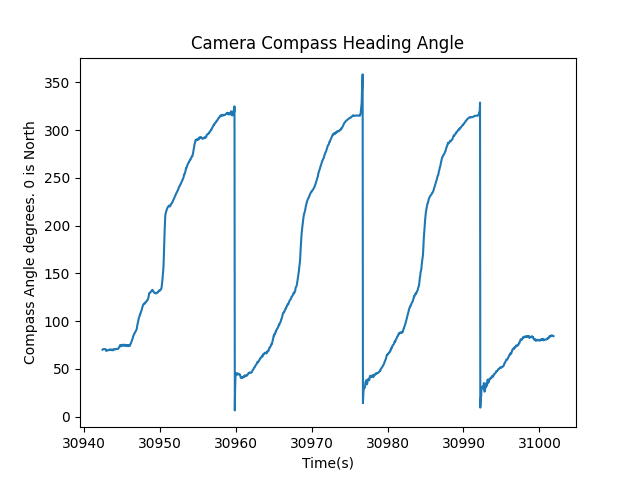

This is likely due to noise registered by the sensors in the camera (gyroscope, accelerometer, and magnetometer) used to perform the adjustment calculations shown below:

High and low pass filters

One simple, and probably quite effective, solution would be to implement a low and/or high pass filter on the telemetry values used to calculate roll, pitch, yaw and heading values.

A low pass filter attenuates frequencies above a chosen cutoff and a high pass filter attenuates frequencies below it, so together they can filter out or smooth the unwanted extremes (based on the high and low end values) found in the telemetry.

These types of filter are used by a lot of software, including all current GoPro camera firmware and GoPro’s post-processing tools.
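As a very small illustration of the idea, here is a sketch of an exponential low pass filter in Python. For brevity it is applied to the heading values themselves rather than to the raw sensor values, which is my simplification. It smooths the sine and cosine of each heading so that values either side of north (for example 359 and 1 degrees) do not average out to 180. The function name and the `alpha` default are assumptions to tune, not values from any GoPro tool.

```python
import math


def low_pass_headings(headings, alpha=0.2):
    """Exponentially smooth headings (degrees); smaller alpha means heavier smoothing."""
    smoothed = []
    x = y = None
    for h in headings:
        r = math.radians(h)
        cx, cy = math.cos(r), math.sin(r)
        if x is None:
            # Seed the filter with the first sample.
            x, y = cx, cy
        else:
            x = alpha * cx + (1 - alpha) * x
            y = alpha * cy + (1 - alpha) * y
        smoothed.append(math.degrees(math.atan2(y, x)) % 360.0)
    return smoothed


# Illustrative noisy headings jittering around north.
noisy = [359.0, 1.0, 359.0, 1.0, 359.0]
print(low_pass_headings(noisy))
```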

Though it sounds simple, this topic gets complex very quickly. If I wanted to, I could use post-processing to stabilise horizontal jitter in the video (using the original telemetry). I won’t for this post, but Gyroflow is, in my limited experience, a useful tool for doing this with non-360 action cameras (and it supports a variety of cameras).

Is dynamic yaw adjustment only useful for World Lock mode?

When World Lock mode is not used, yaw=0 will always be the direction the front lens is facing.

Many smoothing algorithms that stabilise the footage, for example GoPro’s HyperSmooth, use yaw (z), roll (y), and pitch (x) as inputs. Having the whole panorama available means these algorithms can take advantage of all three axes combined to create the smoothest visual output.

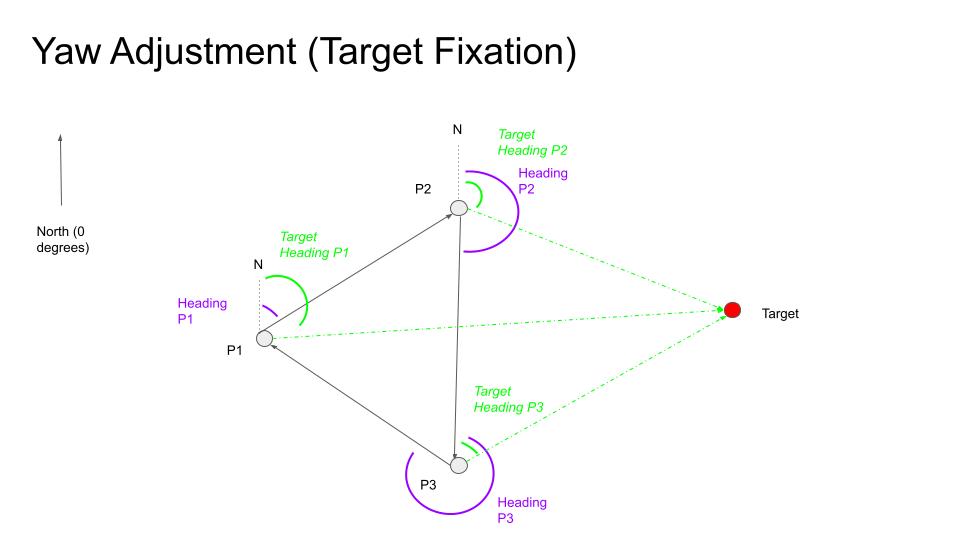

The other main use-case for dynamic yaw adjustment in non-World Lock modes is target fixation, a technique that is becoming increasingly popular.

For example, let's say in my video I wanted the camera to stay fixed on a car as I moved.

To do this, you need to know the position (GPS co-ordinates) of the object in relation to the camera.

With these values, you can calculate the heading of the object from each frame.

With the actual heading for each frame and the heading of the object, you can then find the yaw offset (delta) value in the same way (actual heading - target heading).
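A minimal sketch of that calculation in Python, using the standard initial great-circle bearing formula to get the heading from the camera to the target. The function names and co-ordinates are hypothetical, and the offset is wrapped into -180 to 180 degrees as an assumption about what the yaw adjustment expects.

```python
import math


def bearing_to_target(cam_lat, cam_lon, tgt_lat, tgt_lon):
    """Initial great-circle bearing (degrees from north) from camera to target."""
    phi1, phi2 = math.radians(cam_lat), math.radians(tgt_lat)
    dlon = math.radians(tgt_lon - cam_lon)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0


def target_yaw_offset(actual_heading, target_heading):
    """actual heading - target heading, wrapped into [-180, 180)."""
    return (actual_heading - target_heading + 180.0) % 360.0 - 180.0


# Illustrative positions: camera at (0, 0), target one degree of longitude to the east.
target_heading = bearing_to_target(0.0, 0.0, 0.0, 1.0)
offset = target_yaw_offset(45.0, target_heading)
print(target_heading, offset)
```

This would be repeated for every frame, using the camera's GPS position and heading at that frame.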

tl;dr scripted proof-of-concept

We fully implemented the approach discussed over the last 3 weeks as proof-of-concept code.

If you want to give it a try for yourself, check out our GoPro RPY repository on GitHub, which will perform the UnWorld Lock process described using the following command:

python3 main.py TELEMETRY.json --plot true --video_input VIDEO.mp4 --mode unworldlock
